Diving into Deep Learning

Summary

- (Update: This series is currently on pause while I'm focused elsewhere within AI.)

- Overview: This is the index page for my learnings and reflections as I work through the fast.ai course, "Practical Deep Learning for Coders".

- Background: Artificial intelligence has improved exponentially over the past decade, with breakthroughs in data availability, compute performance, and algorithms. We have a new primitive that can and will be used ~everywhere. It's worth learning.

- Motivation: Learning from the past, we should be wary of the dual-use nature of these new capabilities. We need to proactively counteract malicious actors, prevent problematic feedback loops, and think ahead for handling the risks of much more capable systems that will emerge over the next ~5 years.

- Context: I'm focusing on deep learning because it is currently the most generally capable approach to AI we have. It's the foundation behind many of the headlines we see today. Theoretically, the Universal Approximation Theorem tells us that ~any continuous function can be approximated by a neural network. That gives cross-domain problem-solving power.

- Conclusion: To build fundamental knowledge, I am working through the fast.ai course as a starting point and chronicling my learnings as I go for the benefit of others.

The World Has Changed

In just a decade's time, we've gone from laughing at clunky chatbots to seeing superhuman capabilities across a broad range of tasks. Omnipresent hardware acceleration and exabytes of data now enable what was once science fiction: semi-autonomous vehicles, an unprecedented revolution in the life sciences, weather forecasts orders of magnitude faster than before, and conversational AI assistants far beyond what Star Trek imagined.

There are also faddish developments. AI is now everywhere, even in places where it's not that useful. It makes the "Excel but with Stories" meme look quaint.

Still, when you zoom out, it's clear that we have a new technological primitive: a foundational capability or tool that can be used to build others.

The Dual Use Problem

After realizing all this, I began to get worried.

You see, I have a long-standing interest in military history and technology. One of the fundamental themes there is the dual-use problem. Knowledge and technology that can be used to heal or build can also be used to kill and destroy.

How well are we countering the misuse of new capabilities by malicious actors? Or addressing the problematic feedback loops that can arise in the interactions between humans and algorithms? What of the complex problems that will emerge from autonomous systems interacting with each other?

I worry the answer is "not very well," especially given the harms that are already possible even without any further research advancements.

For example, a machine learning model used to generate candidate drug molecules can also be used to discover chemical weapons far more potent than the ones that exist today. This has come up before with fertilizer development + explosives, microbiology + bioweapons, and nuclear energy + nuclear weapons.

I am particularly concerned with how AI lowers barriers when it comes to CBRN (chemical, biological, radiological, nuclear) weapon development. Current nonproliferation regimes (systems to prevent these weapons from falling into unwanted hands) focus on supply chain chokepoints. These are the difficult-to-conceal equipment and inputs needed to make new weapons; the heavily guarded locations of current ones; the list of known experts with weaponization knowledge; and so on.

Advanced AI models throw these constraints out the window. They lower the level of expertise needed and enable the discovery of novel precursors: a harmless-looking chemical compound here, a normal DNA or RNA sequence there. This makes it especially difficult to monitor and combat malicious non-state actors.

Looking even only at pure software use-cases of AI, there is still cause for concern:

- Social media optimizing for engagement and amplifying political polarization and disinformation in the process

- Risk prediction scores that create a feedback loop for recidivism

- Forgery of all content types becoming trivial, enabling unprecedented information warfare

- Just plain incorrect results, as with Epic’s sepsis predictions

We cannot simply dismiss these as "policy problems" that "someone else" will figure out. That's abandoning responsibility that fundamentally begins with us, the technologists pushing the frontiers of possibility. We have the best understanding of runtime reality. If there is a gap in policy, then we need to be the first ones to point it out and propose solutions. We are the ones best positioned to do so and we are the ones most directly responsible for the downstream consequences of not doing so.

These problems become more complex with multimodal and agentic AI. And all of this is before we even consider how widespread automation is upending society and the imminent threat of unaligned artificial general intelligence (AGI).

All in all, I am convinced that I need to learn more. Way more.

Why Deep Learning First?

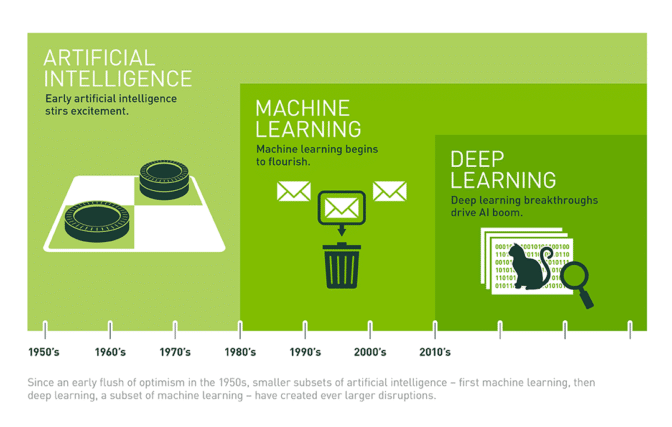

Let's back up and define some terms:

- Artificial intelligence (AI): as a field of study, the academic discipline that focuses on "the study and construction of agents that do the right thing" (Artificial Intelligence: A Modern Approach, 4th edition, p.22). As a noun, a machine system that takes rational action to accomplish a given objective and so demonstrates intelligence.

- Machine learning (ML): an approach to (and subset of) AI that focuses on systems that can learn from examples of problems and their solutions, and then generalize to solving previously-unseen problems without explicit instructions on how to do so.

- Deep learning (DL): a subset of machine learning that uses artificial neural networks to solve problems. These networks are modeled after biological brains, and are "deep" in that they stack many layers.

I'm starting with deep learning specifically because it's the most powerful approach to artificial intelligence we have today. It dominates the state-of-the-art (SOTA) results currently out there.

(Source)

There's a key theoretical result underpinning all this: the Universal Approximation Theorem, which loosely states that "neural networks with a single hidden layer can be used to approximate any continuous function to any desired precision". Translation: even a very simple model of organic computation is surprisingly capable across many different problem domains.
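To make the theorem a bit more concrete, here is a minimal sketch of the idea in plain Python. It hand-constructs a network with one hidden layer of ReLU units that approximates `sin(x)` on `[0, 2π]`, and shows the worst-case error shrinking as hidden units are added. A few assumptions to flag: the weights are computed directly from sample points rather than learned by training, the choice of ReLU and of `sin` is mine, and the helper names (`build_relu_network`, `max_error`) are hypothetical. It illustrates the flavor of the result on one bounded interval; it is not a proof.

```python
import math


def relu(z):
    """Standard ReLU activation."""
    return max(0.0, z)


def build_relu_network(f, a, b, n_hidden):
    """Build a one-hidden-layer ReLU network that matches f at
    n_hidden + 1 evenly spaced points ("knots") on [a, b].

    Assumption: weights are set by hand from samples of f, not trained.
    """
    knots = [a + (b - a) * i / n_hidden for i in range(n_hidden + 1)]
    values = [f(x) for x in knots]
    # Slope of the straight line between each pair of neighboring knots.
    slopes = [
        (values[i + 1] - values[i]) / (knots[i + 1] - knots[i])
        for i in range(n_hidden)
    ]
    # Hidden unit i computes relu(x - knots[i]).
    # Its output weight is the change in slope that "switches on" at that knot.
    weights = [slopes[0]] + [slopes[i] - slopes[i - 1] for i in range(1, n_hidden)]
    biases = knots[:n_hidden]
    output_bias = values[0]

    def network(x):
        hidden = [relu(x - k) for k in biases]
        return output_bias + sum(w * h for w, h in zip(weights, hidden))

    return network


def max_error(f, network, a, b, n_samples=1000):
    """Largest absolute gap between f and the network on a grid over [a, b]."""
    xs = (a + (b - a) * i / n_samples for i in range(n_samples + 1))
    return max(abs(f(x) - network(x)) for x in xs)


for n_hidden in (4, 16, 64):
    net = build_relu_network(math.sin, 0.0, 2 * math.pi, n_hidden)
    err = max_error(math.sin, net, 0.0, 2 * math.pi)
    print(f"{n_hidden:3d} hidden units -> max error {err:.5f}")
```

Each hidden unit contributes one "bend" to a piecewise-linear curve, so more units means more bends and a closer fit. Real networks learn these weights from data instead of having them written down by hand, which is where training comes in.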

Empirically, artificial neural networks with many layers of neurons tend to be most effective. They encode layers of concepts from the data on which they're trained. For an introduction to the underlying technical fundamentals, see this video playlist.

At an intuitive level, this checks out. We're mirroring the only form of intelligence we know of today: biological brains. If you're doubtful that we understand the brain well enough to be able to model it to any useful degree, it's time to update your beliefs. See this discussion of predictive coding and the second chapter of this book as a starting point.

Learning in Public

Why am I blogging as I go? A few reasons:

(1) It's a habit tracker to keep me consistently learning and doing. Seeing past progress is very satisfying and drives momentum.

(2) It's an opportunity to capture knowledge just as I understood it. Future-me will inevitably need to review this material at some point. How great would it be to already have a library of knowledge structured from first principles and hands-on examples?

(3) I want to help others learn. By giving a top-down, progressive view of what I've just learned, I can sidestep the Curse of Knowledge. Having just extended my own knowledge tree, I know what branches are needed to explain a new concept to others. I'll be less likely to give a bottom-up explanation that requires knowledge the other person doesn't have yet. It's why many courses have a learning assistant model for peers helping peers.

Into the future we go!